All orders are built, shipped and supported in Germany

Dual NVIDIA 72-core CPUs on a Grace™ CPU Superchip Series processor, up to 480GB LPDDR5X onboard memory, 2000W redundant PSU, 8x front hot-swap E1.S NVMe drive bays.

Single NVIDIA 72-core CPU on a Grace™ CPU Superchip Series processor, up to 480GB LPDDR5X onboard memory, 2000W redundant PSU, up to 8x hot-swap E1.S drives & 2x M.2 NVMe drives.

Liquid-cooled, single NVIDIA 72-core CPU on a Grace™ CPU Superchip Series processor, up to 480GB LPDDR5X onboard memory, 2000W redundant PSU, up to 8x hot-swap E1.S drives & 2x M.2 NVMe drives.

Dual-node, single NVIDIA 72-core CPU on a Grace™ CPU Superchip Series processor, up to 480GB LPDDR5X onboard memory, 2700W redundant PSU, up to 4x hot-swap E1.S drives & 2x M.2 NVMe drives per node.

Dual-node, liquid-cooled, single NVIDIA 72-core CPU on a Grace™ CPU Superchip Series processor, up to 480GB LPDDR5X onboard memory, 2700W redundant PSU, up to 4x hot-swap E1.S drives & 2x M.2 NVMe drives per node.

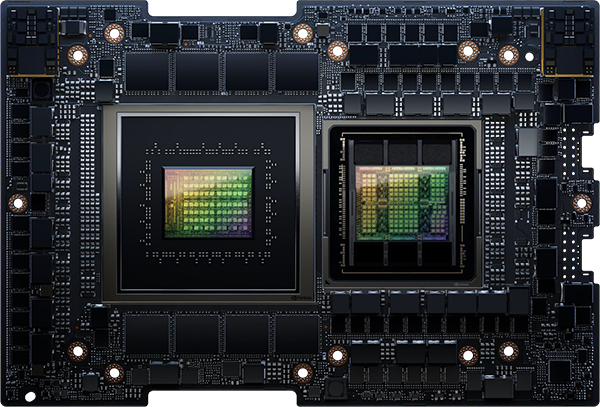

The NVIDIA Grace Hopper™ architecture combines the innovative power of the NVIDIA Hopper™ GPU and the flexibility of the NVIDIA Grace™ CPU into one advanced superchip. This integration is made possible by the NVIDIA® NVLink® Chip-to-Chip (C2C) interconnect, which provides high bandwidth and memory coherence between the two components. This unified architecture maximises performance and efficiency, allowing the GPU and CPU to work together seamlessly across a wide range of computing tasks.

NVIDIA NVLink-C2C is a memory-coherent, high-bandwidth, and low-latency interconnect for superchips. At the core of the GH200 Grace Hopper Superchip, it provides up to 900 gigabytes per second (GB/s) of bandwidth, which is 7 times the bandwidth of the x16 PCIe Gen5 links commonly used in accelerated systems. NVLink-C2C allows applications to use both GPU and CPU memory efficiently. With up to 480GB of LPDDR5X CPU memory per GH200 Grace Hopper Superchip, the GPU has direct access to 7X more fast memory than with HBM3 alone, or almost 8X more fast memory with HBM3e. GH200 can be used in standard servers to run a variety of inference, data analytics, and other compute- and memory-intensive workloads. GH200 can also be combined with the NVIDIA NVLink Switch System, allowing all GPU threads running on up to 256 NVLink-connected GPUs to access up to 144 terabytes (TB) of memory at high bandwidth.
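As a rough sanity check on the "7 times" figure, the sketch below computes the bidirectional bandwidth of a PCIe Gen5 x16 link from first principles and divides the 900GB/s NVLink-C2C figure by it. It assumes 32 GT/s per lane and 128b/130b line coding, and ignores any further protocol overhead, so the result is an approximation rather than a measured number.

```python
# Rough comparison of NVLink-C2C against a PCIe Gen5 x16 link (a sketch).
# Assumptions: 32 GT/s per lane, 128b/130b encoding, protocol overhead ignored.

PCIE_GEN5_GT_PER_LANE = 32       # gigatransfers per second, one bit per transfer
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line coding
NVLINK_C2C_GB_S = 900            # bidirectional figure quoted for GH200


def pcie_gen5_bidirectional_gb_s(lanes: int = 16) -> float:
    """Approximate bidirectional PCIe Gen5 bandwidth in GB/s."""
    per_direction_gbit = PCIE_GEN5_GT_PER_LANE * lanes * ENCODING_EFFICIENCY
    per_direction_gbyte = per_direction_gbit / 8  # bits -> bytes
    return 2 * per_direction_gbyte                # count both directions


pcie = pcie_gen5_bidirectional_gb_s()
ratio = NVLINK_C2C_GB_S / pcie
print(f"PCIe Gen5 x16: {pcie:.1f} GB/s; NVLink-C2C: {ratio:.1f}x")
# prints: PCIe Gen5 x16: 126.0 GB/s; NVLink-C2C: 7.1x
```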

The NVIDIA Grace CPU offers twice the performance per watt of traditional x86-64 platforms and is the fastest Arm® data center CPU in the world. It is designed for high single-threaded performance, high memory bandwidth, and outstanding data-movement capabilities. By combining 72 Neoverse V2 Armv9 cores with up to 480GB of server-grade LPDDR5X memory with ECC, the Grace CPU achieves an optimal balance between bandwidth, energy efficiency, capacity, and cost. Compared with an eight-channel DDR5 design, the Grace CPU's LPDDR5X memory system delivers 53 percent more bandwidth while using only one-eighth the power per gigabyte per second.
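The two memory claims can be combined into a single figure. The sketch below uses normalised units (the eight-channel DDR5 design is taken as 1.0 bandwidth at 1.0 power, an assumption for illustration only) and assumes both systems run at their peak bandwidth. Under those assumptions, 1.53 times the bandwidth at one-eighth the power per GB/s works out to roughly 19 percent of the DDR5 design's memory power.

```python
# Combine "53% more bandwidth" with "one-eighth the power per GB/s" (a sketch).
# Normalised units: the DDR5 baseline is defined as 1.0 bandwidth at 1.0 power.

DDR5_BANDWIDTH = 1.0
DDR5_POWER = 1.0
BANDWIDTH_GAIN = 1.53       # 53 percent more bandwidth
POWER_PER_BW_RATIO = 1 / 8  # one-eighth the power per unit of bandwidth


def lpddr5x_relative_power() -> float:
    """LPDDR5X memory power at peak bandwidth, relative to the DDR5 baseline."""
    bandwidth = DDR5_BANDWIDTH * BANDWIDTH_GAIN
    power_per_bw = (DDR5_POWER / DDR5_BANDWIDTH) * POWER_PER_BW_RATIO
    return bandwidth * power_per_bw


print(f"{lpddr5x_relative_power():.2f}")  # prints: 0.19
```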

The H100 Tensor Core GPU is NVIDIA’s latest data center GPU, offering a significant performance boost for large-scale AI and HPC compared to the previous A100 Tensor Core GPU. Built on the new Hopper GPU architecture, the NVIDIA H100 introduces several innovations:

The GH200 Grace Hopper Superchip is the first true heterogeneous accelerated platform tailored for HPC workloads. It accelerates any application by leveraging the strengths of both GPUs and CPUs, while offering the simplest and most efficient heterogeneous programming model yet. This allows scientists and engineers to concentrate on tackling the world's most pressing problems. For AI inference workloads, GH200 Grace Hopper Superchips combine with NVIDIA networking technologies to offer the most cost-effective scaling solutions, enabling users to handle larger datasets, more complex models, and new workloads with access to up to 624GB of high-speed memory. For AI training, up to 256 NVLink-connected GPUs can access up to 144TB of memory at high bandwidth for large language model (LLM) or recommender system training.
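The 624GB and 144TB figures follow from simple addition and multiplication. The sketch below reproduces them under our own reading of the numbers, which is an assumption: 624GB is 480GB of LPDDR5X plus 144GB of HBM3e on one superchip, and 144TB is 256 superchips with 96GB of HBM3 each, counted in binary units (1TB = 1,024GB).

```python
# Reproduce the headline GH200 memory figures (a sketch; assumptions as stated above).

LPDDR5X_GB = 480   # CPU memory per superchip
HBM3_GB = 96       # GPU memory, HBM3 variant
HBM3E_GB = 144     # GPU memory, HBM3e variant
NVLINK_GPUS = 256  # maximum NVLink-connected GPUs quoted in the text


def fast_memory_gb(hbm_gb: int) -> int:
    """CPU + GPU memory directly addressable by one superchip's GPU."""
    return LPDDR5X_GB + hbm_gb


per_chip_hbm3e = fast_memory_gb(HBM3E_GB)           # 480 + 144
cluster_gb = NVLINK_GPUS * fast_memory_gb(HBM3_GB)  # 256 * (480 + 96)
cluster_tb = cluster_gb / 1024                      # binary TB

print(per_chip_hbm3e, cluster_gb, cluster_tb)  # prints: 624 147456 144.0
```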

| | 1U with Grace Hopper | 1U with Grace Hopper LC | 1U 2-Node with Grace Hopper | 1U 2-Node with Grace CPU | 2U with Grace CPU | 2U with x86 DP |
|---|---|---|---|---|---|---|
| Model | ARS-111GL-NHR | ARS-111GL-NHR-LCC | ARS-111GL-DNHR-LCC | ARS-121L-DNR | ARS-221GL-NR | SYS-221GE-NR |
| CPU | 72-core Grace Arm Neoverse V2 CPU + H100 Tensor Core GPU in a single chip | 72-core Grace Arm Neoverse V2 CPU + H100 Tensor Core GPU in a single chip | 72-core Grace Arm Neoverse V2 CPU + H100 Tensor Core GPU in a single chip per node | 144-core Grace Arm Neoverse V2 CPU in a single chip per node (total of 288 cores in one system) | 144-core Grace Arm Neoverse V2 CPU in a single chip | 4th or 5th Generation Intel Xeon Scalable processors |
| Cooling | Air-cooled | Liquid-cooled | Liquid-cooled | Air-cooled | Air-cooled | Air-cooled |
| GPU Support | NVIDIA H100 Tensor Core GPU with 96GB of HBM3 | NVIDIA H100 Tensor Core GPU with 96GB of HBM3 | NVIDIA H100 Tensor Core GPU with 96GB of HBM3 per node | Please contact us for possible configurations | Up to 4 double-width GPUs, including NVIDIA H100 PCIe, H100 NVL, and L40S | Up to 4 double-width GPUs, including NVIDIA H100 PCIe, H100 NVL, and L40S |
| Memory | CPU: 480GB integrated LPDDR5X with ECC; GPU: 96GB HBM3 | CPU: 480GB integrated LPDDR5X with ECC; GPU: 96GB HBM3 | CPU: 480GB integrated LPDDR5X with ECC per node; GPU: 96GB HBM3 per node | Up to 480GB of integrated LPDDR5X with ECC and up to 1TB/s of memory bandwidth per node | Up to 480GB of integrated LPDDR5X with ECC and up to 1TB/s of memory bandwidth | Up to 2TB, 32x DIMM slots, ECC DDR5-4800 DIMMs |
| Networking | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 | 2x PCIe 5.0 x16 slots per node supporting NVIDIA BlueField-3 or ConnectX-7 | 3x PCIe 5.0 x16 slots per node supporting NVIDIA BlueField-3 or ConnectX-7 | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 |
| Storage | 8x hot-swap E1.S drives & 2x M.2 NVMe drives | 8x hot-swap E1.S drives & 2x M.2 NVMe drives | 4x hot-swap E1.S drives & 2x M.2 NVMe drives per node | 4x hot-swap E1.S drives & 2x M.2 NVMe drives per node | 8x hot-swap E1.S drives & 2x M.2 NVMe drives | 8x hot-swap E1.S drives & 2x M.2 NVMe drives |
| Power Supplies | 2x 2000W Titanium Level | 2x 2000W Titanium Level | 2x 2700W Titanium Level | 2x 2700W Titanium Level | 3x 2000W Titanium Level | 3x 2000W Titanium Level |

Versatile Scale Out with Unmatched Performance

| Grace CPU | Specification |
|---|---|
| CPU core count | 72 Arm Neoverse V2 cores |
| L1 cache | 64KB i-cache + 64KB d-cache per core |
| L2 cache | 1MB per core |
| L3 cache | 114MB |
| Base frequency | 3.1GHz |
| All-core single instruction, multiple data (SIMD) frequency | 3.0GHz |
| LPDDR5X size | Up to 480GB |
| Memory bandwidth | Up to 512GB/s |
| PCIe links | Up to 4x PCIe x16 (Gen 5) |
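A few aggregate and per-core figures can be derived directly from the table. The sketch below is plain arithmetic on the listed values; it assumes the 512GB/s peak is shared evenly across all 72 cores, which real workloads will not achieve exactly.

```python
# Derived Grace CPU figures from the specification table (a sketch).

CORES = 72
L1_KB_PER_CORE = 64 + 64  # i-cache + d-cache
L2_MB_PER_CORE = 1
L3_MB = 114
MEM_BW_GB_S = 512         # peak LPDDR5X bandwidth


def derived_figures() -> dict:
    """Return aggregate cache sizes and an even per-core share of bandwidth."""
    return {
        "total_l1_mb": CORES * L1_KB_PER_CORE / 1024,
        "total_l2_mb": CORES * L2_MB_PER_CORE,
        "total_l2_plus_l3_mb": CORES * L2_MB_PER_CORE + L3_MB,
        "bw_per_core_gb_s": MEM_BW_GB_S / CORES,
    }


for name, value in derived_figures().items():
    print(f"{name}: {value:.2f}")
```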

| Hopper H100 GPU | Specification |
|---|---|
| FP64 | 34 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS |
| FP32 | 67 teraFLOPS |
| TF32 Tensor Core | 989 teraFLOPS with sparsity (494 teraFLOPS dense) |
| BFLOAT16 Tensor Core | 1,979 teraFLOPS with sparsity (990 teraFLOPS dense) |
| FP16 Tensor Core | 1,979 teraFLOPS with sparsity (990 teraFLOPS dense) |
| FP8 Tensor Core | 3,958 teraFLOPS with sparsity (1,979 teraFLOPS dense) |
| INT8 Tensor Core | 3,958 TOPS with sparsity (1,979 TOPS dense) |
| High-bandwidth memory (HBM) size | Up to 96GB HBM3 or 144GB HBM3e |
| Memory bandwidth | Up to 4TB/s (HBM3) or up to 4.9TB/s (HBM3e) |
| NVIDIA NVLink-C2C CPU-to-GPU bandwidth | 900GB/s bidirectional |
| Module thermal design power (TDP) | Programmable from 450W to 1000W (CPU + GPU + memory) |
| Form factor | Superchip module |
| Thermal solution | Air cooled or liquid cooled |
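One way to read the compute and bandwidth rows together is a simple roofline model: a kernel stays bandwidth-bound unless it performs at least (peak FLOPS ÷ memory bandwidth) floating-point operations per byte it moves. The sketch below applies that textbook formula to the FP64 Tensor Core and dense FP16 Tensor Core figures; the example arithmetic intensity of 4 FLOP/byte is hypothetical.

```python
# Simple roofline model using figures from the H100 table (a sketch).

PEAK_TFLOPS = {
    "FP64 Tensor Core": 67,
    "FP16 Tensor Core (dense)": 990,
}
BANDWIDTH_TB_S = {"HBM3": 4.0, "HBM3e": 4.9}


def ridge_point(peak_tflops: float, bw_tb_s: float) -> float:
    """FLOP per byte needed to become compute-bound (the 10^12 factors cancel)."""
    return peak_tflops / bw_tb_s


def attainable_tflops(intensity: float, peak_tflops: float, bw_tb_s: float) -> float:
    """Roofline: performance is capped by compute or by bandwidth x intensity."""
    return min(peak_tflops, intensity * bw_tb_s)


for name, peak in PEAK_TFLOPS.items():
    for mem, bw in BANDWIDTH_TB_S.items():
        print(f"{name} on {mem}: ridge at {ridge_point(peak, bw):.1f} FLOP/byte")

# A hypothetical kernel doing 4 FLOP per byte is bandwidth-bound on HBM3:
print(attainable_tflops(4, PEAK_TFLOPS["FP64 Tensor Core"], BANDWIDTH_TB_S["HBM3"]))
```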

Industry-Leading Scalable Compute Unit Designed for Large Language Models

In the AI era, a unit of computing power isn't just about the number of servers anymore. Today's artificial intelligence relies on interconnected GPUs, CPUs, memory, and storage across multiple nodes in racks. This infrastructure demands high-speed, low-latency network connections, along with efficient cooling and power delivery to maintain peak performance and effectiveness in various data center settings. Supermicro's SuperCluster solution offers essential components for rapidly advancing Generative AI and Large Language Models (LLMs). This all-inclusive data center solution speeds up delivery time for critical business needs and removes the complexity of building large clusters, which used to require extensive design and optimisation work.

Supermicro's SuperCluster design for NVIDIA MGX systems featuring NVIDIA GH200 incorporates 400Gb/s networking fabrics with a non-blocking architecture. This setup enables each 32-node rack (32 GPUs) and a 256-node cluster to function as a unified compute unit, offering a cohesive pool of high-bandwidth memory that is crucial for high-batch-size LLM workloads and large-scale inference. Whether building a cloud-scale inference infrastructure for LLMs or optimising large models for training, the spine-and-leaf network topology allows the cluster to scale from 256 nodes to thousands of nodes. Supermicro's rigorous testing procedures thoroughly validate the cluster's operational effectiveness before shipment, ensuring customers receive plug-and-play units at the rack or multi-rack cluster level for rapid deployment.
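The scaling claim rests on standard two-tier leaf-spine arithmetic. The sketch below sizes a non-blocking fabric in which every leaf switch gives half its ports to nodes and half to spines. The 64-port switch radix, the single fabric port per node, and the function names are hypothetical choices for illustration, not Supermicro's actual bill of materials. With them, 256 nodes fit on 8 leaves (one per 32-node rack), and a two-tier fabric tops out at 2,048 nodes, beyond which another switching tier would be needed.

```python
# Non-blocking two-tier leaf-spine sizing (a simplified sketch).
# Hypothetical assumptions: identical switches of a given radix, one fabric
# port per node, uplinks spread evenly across spines.
import math


def size_leaf_spine(nodes: int, radix: int) -> tuple[int, int]:
    """Return (leaves, spines) for a non-blocking two-tier fabric."""
    down = radix // 2   # node-facing ports per leaf
    up = radix - down   # spine-facing ports per leaf; up >= down keeps it non-blocking
    leaves = math.ceil(nodes / down)
    if leaves > radix:  # every spine needs at least one port per leaf
        raise ValueError("beyond two-tier capacity; add another switching tier")
    spines = math.ceil(leaves * up / radix)
    return leaves, spines


def max_two_tier_nodes(radix: int) -> int:
    """Largest node count a two-tier fabric of this radix can serve."""
    return radix * (radix // 2)


print(size_leaf_spine(256, 64))  # prints: (8, 4)
print(max_two_tier_nodes(64))    # prints: 2048
```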

Our Rigorous Testing

All Broadberry server and storage solutions undergo a 48-hour test run before they leave our warehouse. This testing procedure, combined with high-quality, industry-leading components, ensures that all of our server and storage solutions meet the strictest quality standards demanded of us.

Unmatched Flexibility

Our main goal is to offer high-quality server and storage solutions with outstanding value for money. We know that every business has different requirements, so we offer unmatched flexibility in designing custom server and storage solutions to meet our customers' needs.

We have established ourselves as one of the largest storage providers in the United Kingdom and have been supplying the world's leading brands with our server and storage solutions since 1989. Our customers include: